[Gemma-2] Fine-Tune Gemma 2 in Keras Using LoRA

Overview

Gemma is a family of lightweight, state-of-the-art open models built from the same research and technology used to create the Gemini models.

In this notebook, we demonstrate how to use KerasNLP to perform LoRA fine-tuning on a Gemma 2B model. The fine-tuning process leverages the Databricks Dolly 15k dataset, a curated collection of 15,000 high-quality, human-generated prompt-response pairs. This dataset is specifically designed to facilitate fine-tuning for large language models (LLMs), making it an excellent choice for this task.
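To make the idea behind LoRA concrete, here is a minimal sketch in plain Python. It does not use KerasNLP, and it is not how the notebook or the library implements it. It assumes the usual LoRA formulation, in which a frozen weight matrix W is adjusted by a scaled low-rank product, W + (alpha / r) * B A. The helper names, the toy matrices, and the sizes passed to `trainable_params` are all illustrative.

```python
# Minimal LoRA sketch in plain Python (no Keras); names and sizes are illustrative.

def matmul(x, y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * (B @ A), where r is the LoRA rank."""
    rank = len(a)  # A has shape (r, k); B has shape (d, r)
    scale = alpha / rank
    delta = matmul(b, a)  # low-rank update with the same shape as W
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d, k, rank):
    """Number of parameters LoRA trains for one d x k weight matrix."""
    return rank * (d + k)


# Frozen 2x3 weight with rank-1 adapters (toy values).
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
A = [[1.0, 2.0, 3.0]]  # shape (1, 3)
B = [[0.5], [0.0]]     # shape (2, 1)
W_eff = lora_effective_weight(W, A, B, alpha=2.0)

# Compare adapter size with a full update for a hypothetical 2048 x 2048 layer.
full = 2048 * 2048
lora = trainable_params(2048, 2048, rank=4)
```

Only A and B are trained while W stays frozen, which is why the adapter for the hypothetical layer above needs far fewer parameters than a full update of the same matrix.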

[Vue] Star Rating

[Vue] Address Validation

Introduction

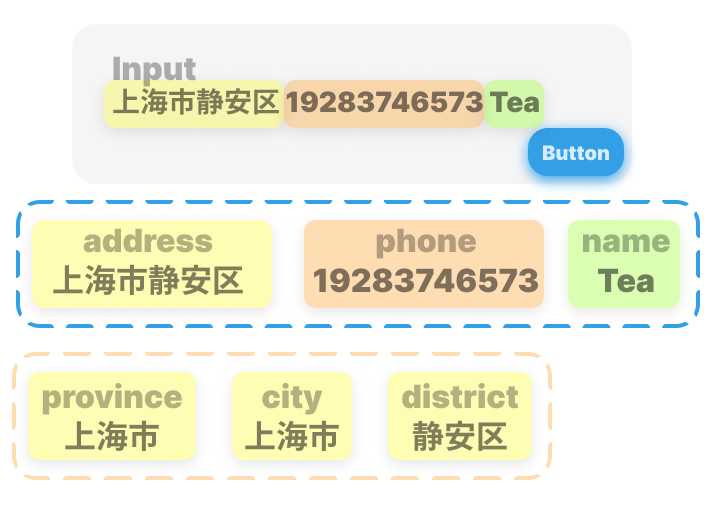

During my internship, my first task was to develop an address validation component that accepts a name, phone number, and address.

This component is designed for address recognition in a Chinese context.

Structure

The component includes an input field for entering the address information and a button to trigger the verification process.

The information will be divided into three sections, as shown in the image below.

[AtCoder] AtCoder Beginner Contest 367

A - Shout Everyday

In the Kingdom of AtCoder, residents are required to shout their love for takoyaki at A o’clock every day.

Takahashi, who lives in the Kingdom of AtCoder, goes to bed at B o’clock and wakes up at C o’clock every day (in the 24-hour clock). He can shout his love for takoyaki when he is awake, but cannot when he is asleep. Determine whether he can shout his love for takoyaki every day. Here, a day has 24 hours, and his sleeping time is less than 24 hours.
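One way to approach this is to check whether hour A falls inside the sleeping interval, which may wrap past midnight. The sketch below assumes A, B, and C are distinct integers from 0 to 23. The function name and the input values are illustrative, not taken from the problem statement.

```python
def can_shout(a: int, b: int, c: int) -> bool:
    """Return True if hour a lies outside the sleeping interval from b to c."""
    if b < c:
        # Sleep does not cross midnight: asleep strictly between b and c.
        asleep = b < a < c
    else:
        # Sleep crosses midnight: asleep after b or before c.
        asleep = a > b or a < c
    return not asleep


# Illustrative inputs: (A, B, C)
for a, b, c in [(21, 8, 14), (0, 21, 7), (10, 7, 17)]:
    print("Yes" if can_shout(a, b, c) else "No")
```

Splitting on whether B is less than C keeps the wrap-around case explicit without any modular arithmetic.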

[CUMCM] #1 Intro & Preparation

[Google Hackathon 2024] Frontend Plugin Developing: Miss Minutes

Introduction

One month ago, I participated in my first-ever hackathon, organized by SegmentFault and Google. The theme was "Innovate for the Future", and the competition ran over an extended period, which left room for deep exploration and development.